In recent years, flash-based SSDs have largely replaced disks for most storage use cases. Internally, each SSD consists of many independent flash chips, each of which can be accessed in parallel. Assuming the SSD controller keeps up, the throughput of an SSD therefore primarily depends on the interface speed to the host. In the past six years, we have seen a rapid transition from SATA to PCIe 3.0 to PCIe 4.0 to PCIe 5.0. As a result, there was an explosion in SSD throughput:

At the same time, we saw not just better performance, but also more capacity per dollar:

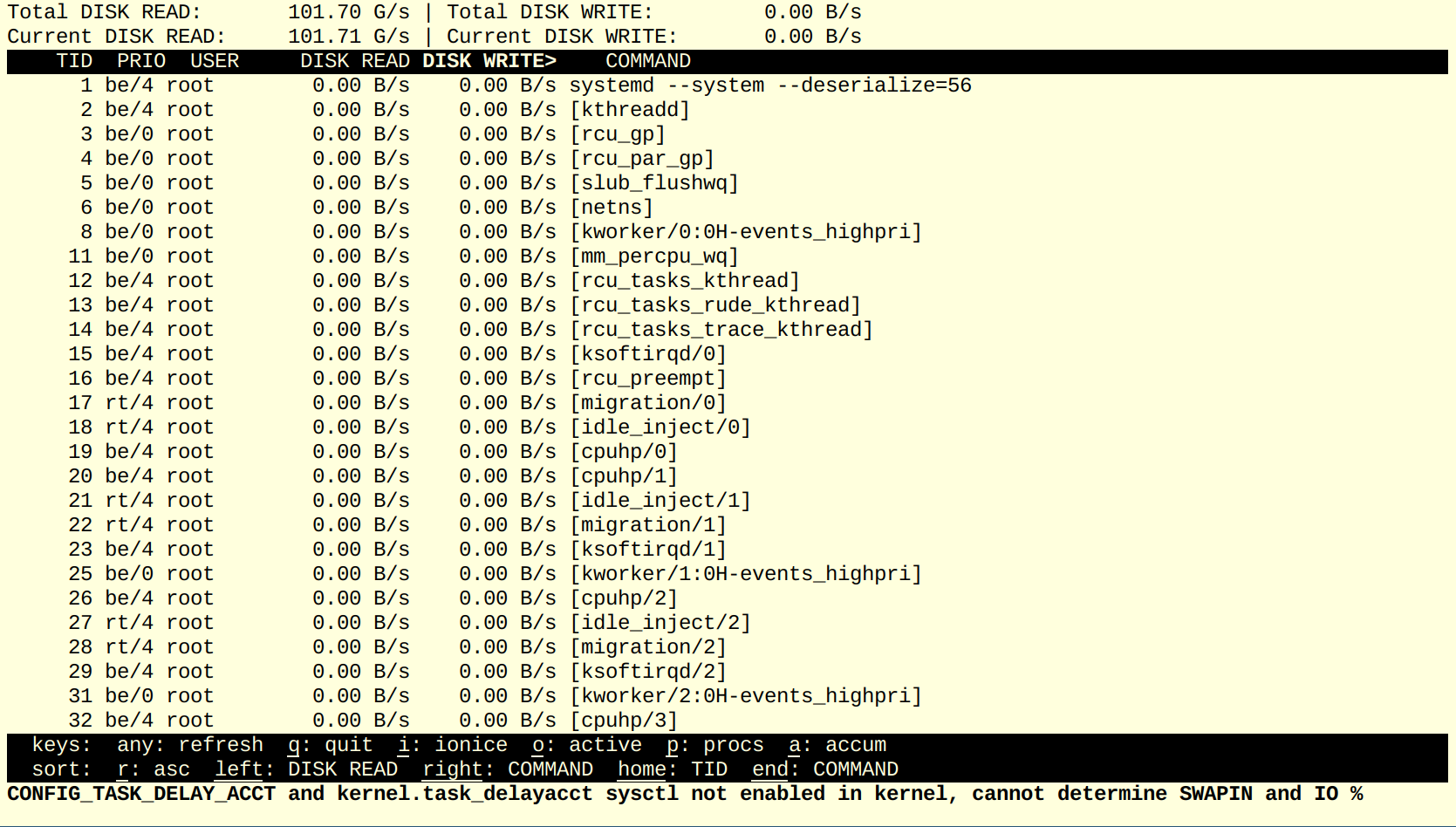

The two plots illustrate the power of a commodity market. The combination of open standards (NVMe and PCIe), huge demand, and competing vendors led to great benefits for customers. Today, top PCIe 5.0 data center SSDs such as the Kioxia CM7-R or Samsung PM1743 achieve up to 13 GB/s read throughput and 2.7M+ random read IOPS. Modern servers have around 100 PCIe lanes, making it possible to have a dozen SSDs (each usually using 4 lanes) in a single server at full bandwidth. For example, in our lab we have a single-socket Zen 4 server with 8 Kioxia CM7-R SSDs, which achieves 100 GB/s (!) I/O bandwidth.
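The lane and bandwidth arithmetic in the paragraph above can be checked with a few lines of Python. This is a back-of-the-envelope sketch using only the round numbers quoted in this post (about 100 lanes per server, 4 lanes per SSD, 13 GB/s per SSD); the constant and function names are illustrative. It ignores lanes consumed by NICs and other devices, which is one reason the practical figure is closer to a dozen SSDs than to the theoretical maximum.

```python
# Back-of-the-envelope PCIe lane and bandwidth budget for a single server.
# All figures are the round numbers quoted in the post, not measurements.

SERVER_PCIE_LANES = 100   # approximate lane count of a modern server
LANES_PER_SSD = 4         # typical x4 NVMe link
SSD_READ_GBPS = 13.0      # GB/s, peak read of a top PCIe 5.0 SSD


def max_ssds(lanes: int, lanes_per_ssd: int) -> int:
    """SSDs that fit at full link width (ignores lanes used by NICs etc.)."""
    return lanes // lanes_per_ssd


def aggregate_read_gbps(num_ssds: int, per_ssd_gbps: float) -> float:
    """Upper bound on aggregate read bandwidth, assuming linear scaling."""
    return num_ssds * per_ssd_gbps


if __name__ == "__main__":
    print(max_ssds(SERVER_PCIE_LANES, LANES_PER_SSD))    # 25
    print(aggregate_read_gbps(8, SSD_READ_GBPS))          # 104.0
```

The 104 GB/s upper bound for 8 drives is consistent with the roughly 100 GB/s measured on the lab server, which suggests the drives, not the host, are the limit in that setup.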

AWS EC2 was an early NVMe pioneer, launching the i3 instance with 8 physically attached NVMe SSDs in early 2017. At that time, NVMe SSDs were still expensive, and having 8 in a single server was quite remarkable. The per-SSD read (2 GB/s) and write (1 GB/s) performance was considered state of the art as well. Another step forward occurred in 2019 with the launch of i3en instances, which doubled storage capacity per dollar.

Since then, several NVMe instance types, including i4i and im4gn, have been launched. Surprisingly, however, the performance has not increased; seven years after the i3 launch, we are still stuck with 2 GB/s per SSD. Indeed, the venerable i3 and i3en instances basically remain the best EC2 has to offer in terms of IO-bandwidth/$ and SSD-capacity/$, respectively. Personally, I find this very surprising given the SSD bandwidth explosion and cost reductions we have seen on the commodity market. At this point, the performance gap between state-of-the-art SSDs and those offered by major cloud vendors, especially in read throughput, write throughput, and IOPS, is nearing an order of magnitude. (Azure's top NVMe instances are only slightly faster than AWS's.)
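To put "nearing an order of magnitude" into numbers, the following sketch divides the read figures quoted in this post: 13 GB/s for a top PCIe 5.0 SSD versus 2 GB/s per EC2 SSD, and the 100 GB/s measured on our 8-SSD lab server versus 8 × 2 GB/s for an i3 instance. It covers read throughput only; IOPS and write throughput are not modeled, and the variable names are illustrative.

```python
import math

# Read throughput figures quoted in the post (GB/s).
CLOUD_SSD_READ = 2.0          # per-SSD read on i3 and later EC2 NVMe instances
CLOUD_SSDS_PER_INSTANCE = 8   # i3 instance with 8 local NVMe SSDs
MODERN_SSD_READ = 13.0        # top PCIe 5.0 data center SSD
LAB_SERVER_READ = 100.0       # measured on our server with 8x Kioxia CM7-R


def speedup(new: float, old: float) -> float:
    """Ratio of a newer throughput figure to an older one."""
    return new / old


per_ssd = speedup(MODERN_SSD_READ, CLOUD_SSD_READ)
per_server = speedup(LAB_SERVER_READ, CLOUD_SSDS_PER_INSTANCE * CLOUD_SSD_READ)

print(f"per-SSD read gap:    {per_ssd:.2f}x")     # 6.50x
print(f"per-server read gap: {per_server:.2f}x")  # 6.25x
# How close the gap is to a full order of magnitude (1.0 would mean 10x).
print(f"log10(per-SSD gap):  {math.log10(per_ssd):.2f}")  # 0.81
```

Both ratios land between 6x and 7x for reads alone.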

What makes this stagnation in the cloud even more surprising is that we have seen great advances in other areas. For example, during the same 2017 to 2023 time frame, EC2 network bandwidth exploded, increasing from 10 Gbit/s (c4) to 200 Gbit/s (c7gn). Now, I can only speculate why the cloud vendors have not caught up on the storage side:

- One theory is that EC2 intentionally caps the write speed at 1 GB/s to avoid frequent device failure, given the total number of writes per SSD is limited. However, this does not explain why the read bandwidth is stuck at 2 GB/s.

- A second possibility is that there is no demand for faster storage because very few storage systems can actually exploit tens of GB/s of I/O bandwidth. See our recent VLDB paper. On the other hand, as long as fast storage devices are not widely available, there is also little incentive to optimize existing systems.

- A third theory is that if EC2 were to launch fast and cheap NVMe instance storage, it would disrupt the cost structure of its other storage services (in particular EBS). This is, of course, the classic innovator's dilemma, but one would hope that one of the smaller cloud vendors would make this step to gain a competitive edge.
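The first theory can be made concrete with a simple wear model. The sketch below assumes a hypothetical drive (7.68 TB, rated for 1 drive write per day over a 5-year warranty); these figures are illustrative assumptions, not AWS or vendor specifications. It computes how many days a tenant writing nonstop at the capped rate would need to consume the drive's rated endurance.

```python
SECONDS_PER_DAY = 86_400


def rated_tbw(capacity_tb: float, dwpd: float, warranty_years: float) -> float:
    """Rated terabytes written: capacity x drive writes per day x days."""
    return capacity_tb * dwpd * 365 * warranty_years


def days_to_wearout(capacity_tb: float, dwpd: float,
                    warranty_years: float, write_cap_gb_s: float) -> float:
    """Days until rated endurance is used up at a sustained write rate."""
    tb_per_day = write_cap_gb_s * SECONDS_PER_DAY / 1000  # GB/s -> TB/day
    return rated_tbw(capacity_tb, dwpd, warranty_years) / tb_per_day


if __name__ == "__main__":
    # HYPOTHETICAL drive: 7.68 TB, 1 DWPD, 5-year warranty (~14 PB rated).
    for cap in (1.0, 2.0):
        days = days_to_wearout(7.68, 1.0, 5, cap)
        print(f"{cap:.0f} GB/s cap -> {days:.1f} days")
```

Under this model, worst-case wear time is inversely proportional to the write cap, which is the mechanism the first theory relies on. It says nothing about read bandwidth.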

Overall, I'm not fully convinced by any of these three arguments. Actually, I hope that we'll soon see cloud instances with 10 GB/s SSDs, making this post obsolete.

Related Hackernews Discussion: https://news.ycombinator.com/item?id=39443679

Cloud providers buy only high-capacity drives, so they have less transfer speed per TB of storage available (compared to commodity drives). If a drive is shared between multiple VMs, throughput is shared between them, and they have to obey SLAs.

This could be also a way to manage wear of the drives.

I've seen 60k IOPS and 7 GB/s on Akamai Cloud/Linode, even on the $5 Nanodes. It varies depending on the class of system you get: you get a random Zen 2 or Zen 3 class core, and the better disks are on the Zen 3 instances.

Still pretty slow for databases compared to bare metal. The fractional vCPUs they sell are comparable with the disk difference.

Cloud resources are a pretty bad deal right now and don't reflect the gains from the last 3 years - which have been huge on the CPU side too.

Interesting. I can say that locally on my workstation, SSDs are beneficial for searching with tools like FileSearchEX, where one has to load the contents of thousands of files into memory over and over to find keywords. But I wonder whether, as the HN thread states, the reason is a protocol in front of the actual drives.

Cloud just means someone else's hardware, and your virtual machine shares I/O with everyone. If you want faster, use your own hardware or keep syncing your cloud instance around until you find hardware without noisy neighbors.

The important thing here is that even on the AWS metal instances, which are supposed to give you an entire host, and even if you choose an instance that has LOCAL NVMe SSDs, those are still ridiculously slow.

SSDs on all the clouds are network SSDs and will never be as performant as local disk on bare metal. Network will always be slower than local disk. It's just a matter of time before these network volumes fall behind local SSDs in the numbers.

This is not talking about EBS. This is talking about the "instance-local" SSDs.

Also, networking on AWS is now faster than the supposedly LOCAL NVMe SSDs you get with the instance types that offer them. You can read from S3 at 80 Gbit/s if you choose an instance with a lot of network bandwidth, but only at 64 Gbit/s from "local NVMe SSD" if you have an instance with 4 of them and put them in RAID 0... This is clearly ridiculous.

I don't think the higher-ups at the Big Three Cloud Providers are ready to make the investment for the benefits that faster SSDs provide.

FWIW, the value of cloud has gone so far away thanks to the vendor/ecosystem lock-in effect. The big three don't have to improve performance or lower prices to compete anymore, now that so many companies have swallowed the "devops" buzzpill instead of hiring competent system admins and IT infrastructure engineers.

With a 10+ billion record data file, I could never get Postgres for Azure to match bare metal without spending thousands per month, even when the "bare metal" was a corporate laptop with NVMe running the Windows version of Postgres.

I look forward to more results on this topic. I probably need to re-read your recent paper(s) on what we can get from modern storage. The issues for me include:

* QoS - high write rates can mean high GC rates which bring higher variance from flash GC stalls

* QoS, v2 - with an LSM, high write rates also mean high TRIM rates, and the ability of a device to support TRIM varies, so your DBMS might have to learn this at run time or there will be stalls during TRIM. FusionIO spoiled us long ago: TRIM was always fast. Modern devices don't spoil us.

* backup/restore are hard with high write rates. If restoring from a snapshot, then you need to somehow replay minutes or hours of logs to catch up.

* replication -- both network capacity and the ability to replay the log on a replica are a challenge. There has been some recent work on parallel replication apply. We need more.

Hi Mark. Your points are all related to writes and make a lot of sense for write-heavy OLTP workloads. However, I don't see why cloud vendors don't offer higher read speeds. Viktor

Faster reads would be nice. We might never learn why without working there. Is it network capacity? The perf overhead on VMs? A bottleneck in EBS?

Why don't you press Ctrl and + until the font size suits you? The font size in this blog is quite normal.

All NVMe access in recent AWS instances is managed (“and secured”) by the Nitro backplane running a custom ARM chipset. It’s not networked SSD, as another commenter suggests, but it’s also not true local SSD. The timing of the Nitro introduction and the stalled SSD bandwidth is suggestive. I doubt the Nitro backplane has the capability to emulate NVMe at 100 Gbit/s. https://aws.amazon.com/blogs/hpc/bare-metal-performance-with-the-aws-nitro-system/

As a salesperson at a public cloud provider (not one of the big three), I can confidently say the number of clients we have that need more than 2 GB/s of storage bandwidth is very, very low, and in my 5 years here I have maybe seen 3 people ask about IOPS details and SLAs. In addition, I have always heard our tech team be very cautious about providing higher-speed storage, mostly due to fear of network congestion. Bare-metal NVMe machines seem like the only way to get really high speeds at our cloud at the moment, but this has an uptime impact due to being a single machine.

I don't doubt that is true for many clients, but the big 3 cloud vendors all compete on (and sell) block storage solutions based on high perf and high QoS: Azure Premium Storage, EBS Provisioned IOPS, Google Hyperdisk. Alas, all of these are network-attached storage, and the focus of this post might be limited to locally attached NVMe.

Exadata runs in the Cloud and takes full advantage of NVMe performance with full redundancy and persistence of data even during failures and without downtime. Exadata is available in Oracle Cloud, in a data center of your choosing using Exadata on-premises or Cloud@Customer, and in Azure. Make sure to look at Exadata. It's ridiculously fast.

*sigh* You can't pass 10 GB/s over a 125 MB/s wire. It's like you guys know nothing about computers. Computers in the "cloud", which is just fad talk for the network, run at the speed of the network. With VERY FEW exceptions, like army bases, high rises, and school campuses, MOST networks run at 125 MB/s. Even on hardware advertising higher speeds.

Why is that not changing? Because you would have to dig up and rerun EVERY SINGLE WIRE, EVERYWHERE in the US. And that is COMPLETELY not happening. The same way Teslas ain't gonna charge any faster. You will use the wires that are already there or you will use nothing. The wires which are already there run at 125 MB/s MAX. Always have. Get used to it. SSD speed always has been, and always will be, a myth.

Note that we are talking about local SSDs here. Network speed does not matter at all for that. And in fact AWS does offer much higher network speeds than 1 Gbit/s: M6in, for example, has 200 Gbit/s networking.
